From analysing gaze to creating communication

2019, London, United Kingdom

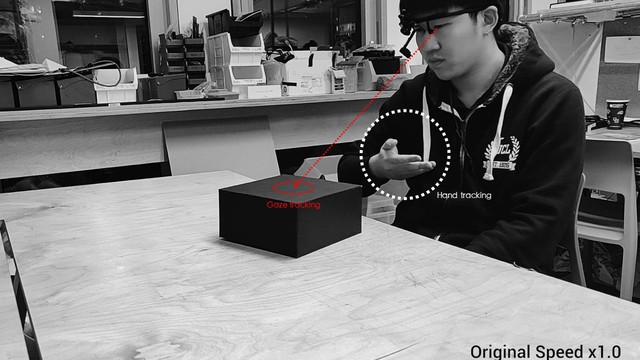

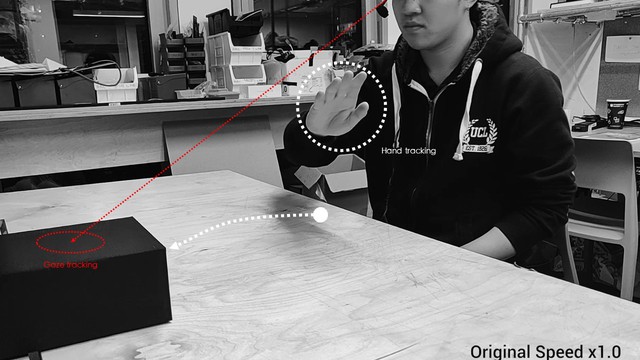

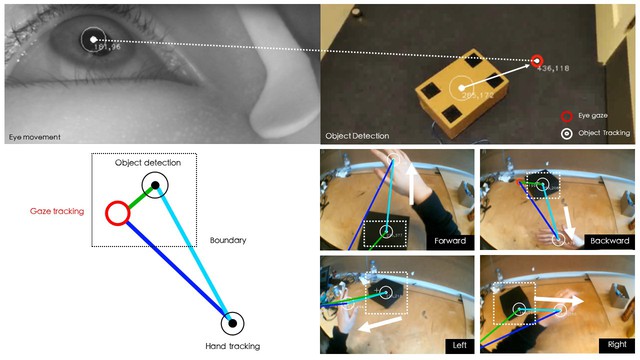

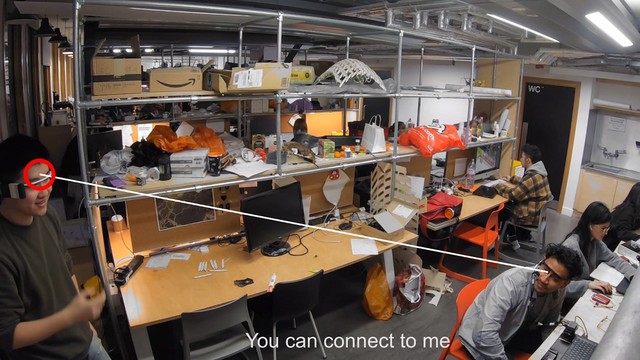

A wearable device that uses eye gaze to interact with objects and people in the environment

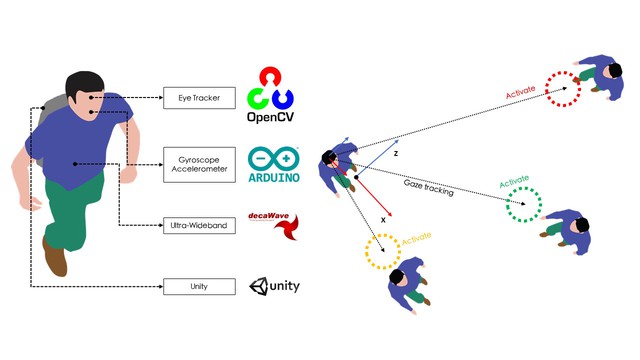

This project seeks to extend the way we communicate with others or interact with devices using eye-tracking and positioning systems.

Eye movements can provide useful information for a variety of scene recognition and visual perception problems, and visual perception has become a critical part of research in human-computer interaction and spatial understanding. Eye movements are also linked to cognitive processes of communication, such as engagement, attention and expression. For this project, a wearable eye-tracking system was developed that simultaneously tracks the three-dimensional direction of eye gaze, captures a wide range of hand gestures, recognises different objects with three cameras, and localises people's movement in built environments using IMU (inertial measurement unit) and ultra-wideband tracking systems. Together, these capabilities create a novel way of using eye gaze to communicate and interact with people and devices.
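As an illustration only, the idea of combining a three-dimensional gaze direction with a localised position could be sketched as follows. This is not the project's implementation: the function names, the coordinate convention (z up, yaw measured from the +x axis), the 5-degree tolerance and the example object positions are all assumptions made for the sketch. It picks the known object whose direction from the wearer lies closest to the gaze ray.

```python
import math

def gaze_direction(yaw, pitch):
    """Unit vector for a gaze given as yaw (about the vertical z axis,
    0 = +x axis) and pitch (upwards from horizontal), both in radians."""
    return (
        math.cos(pitch) * math.cos(yaw),
        math.cos(pitch) * math.sin(yaw),
        math.sin(pitch),
    )

def angle_to(origin, direction, target):
    """Angle in radians between the gaze ray (origin + unit direction)
    and the straight line from the origin to the target point."""
    to_target = tuple(t - o for t, o in zip(target, origin))
    dist = math.sqrt(sum(c * c for c in to_target))
    if dist == 0:
        return 0.0
    cos_a = sum(d * c for d, c in zip(direction, to_target)) / dist
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, cos_a)))

def pick_target(origin, direction, targets, max_angle=math.radians(5)):
    """Return the name of the target closest to the gaze ray, or None
    if no target lies within max_angle (an assumed tolerance)."""
    best, best_angle = None, max_angle
    for name, position in targets.items():
        a = angle_to(origin, direction, position)
        if a <= best_angle:
            best, best_angle = name, a
    return best

# Hypothetical scene: wearer position (e.g. from a positioning system)
# and two objects at assumed coordinates, in metres.
wearer = (0.0, 0.0, 1.6)
objects = {"lamp": (3.0, 0.1, 1.6), "door": (0.0, 4.0, 1.6)}

looking_ahead = pick_target(wearer, gaze_direction(0.0, 0.0), objects)
looking_left = pick_target(wearer, gaze_direction(math.pi / 2, 0.0), objects)
looking_back = pick_target(wearer, gaze_direction(math.pi, 0.0), objects)
```

Using an angular tolerance rather than an exact ray intersection is a design choice for the sketch: it tolerates small errors in both the gaze estimate and the position estimate.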

Details

Team members: Zehao Qin

Supervisor: Associate Professor Ava Fatah gen Schieck

Institution: Bartlett School of Architecture, University College London

Credits

Zehao Qin
