Find your Augmented mascot! 2021, United Kingdom, London

A participatory urban AR game supported by spatial and haptic cognition.

This project explores playful ways to support participatory urbanism through an AR digital platform. The aim is to strengthen citizens' digital rights and to support crowd intelligence and co-creation through spatial and haptic cognition. A study was carried out to explore how participants perceive, accept, host, and resolve augmented objects, and how they understand those objects in relation to the location-based system. Human cognition is too complex to understand completely, so the study concentrates on two aspects. The first is spatial cognition, which is influenced mainly by spatial memory, spatial comprehension, and visual fixation. The second is haptic cognition, which refers to the feel and sense of touch, vibration, movement, and so on. The question is whether the user's spatial and haptic cognition can give us a clearer picture of their behaviour and help answer the hypotheses that revolve around human cognition and unconscious behavioural responses.

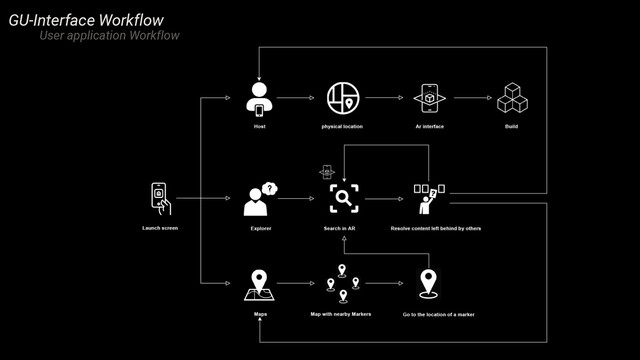

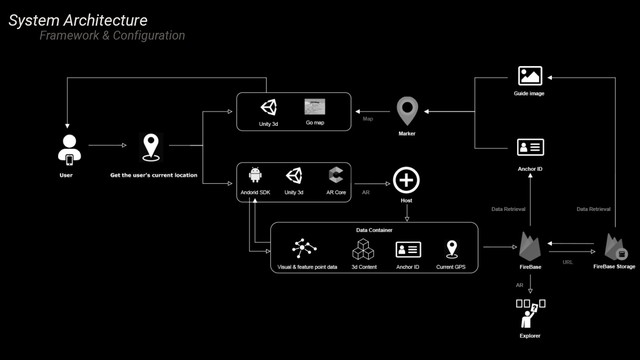

To understand the effect of cognition on spatiality and haptic interfaces, we created a game that let us observe different cognitive factors by tweaking the game and its interface. The game is designed to intrigue users into finding an augmented object, with supporting maps and guides to help locate it. We studied the users' immediate responses, their cognition of the space and the interface, the way they approached the object, the relative importance they gave to the physical and the virtual, and their excitement at finding the augmented reality object. These findings helped us improve the game's interfaces and features, and deepened our understanding of the behaviour and cognition behind them.

The study focuses on :

• Spatial memory: the ability to store information about a space.

• Spatial comprehension: the ability to understand a space.

• Visual fixation: the level of visual focus a person has on a project.

Hosting an object involves capturing visual data and feature points through the device camera, together with the current GPS position. Once the player has constructed the desired 3D content, the host model is packaged with these data and a unique anchor ID, and the resulting dataset is stored in Firebase Cloud Storage. This marks the end of the hosting side.

The game continuously monitors the device location and renders markers for objects in the neighbourhood, with the cloud servers filtering the stored datasets for nearby content. A guide image representing the chosen marker is also displayed on request. On the resolving side, an object is rendered to the user when they reach the physical space and the matching visual data appears in front of the AR camera in the explore interface.

On the whole, tweaking the game to capture specific information in the backend reveals data about many conscious and unconscious decisions and behaviours exhibited by participants during gameplay, which can be interpreted to find meaningful information about human cognition of spatiality and haptics.

*Links to initial studies and analysis below :*

https://drive.google.com/file/d/1fUAw7kmbll0AHApG-4o_Y_jyW4BjQ5F3/view?usp=sharing

https://drive.google.com/file/d/1UnCO2GdU7dUcA3sZd0OW4L1Yq2c69yeX/view?usp=sharing

Details

Team members : Somasundaram Sathish, Xu Yan, Tian Zhenan

Supervisor : Associate Professor Ava Fatah gen Schieck

Institution : Bartlett School of Architecture, University College London

Descriptions

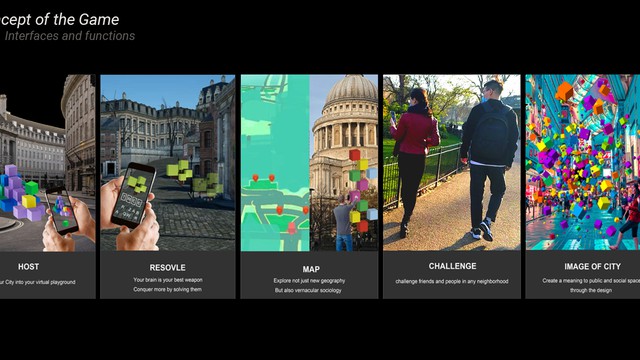

Technical Concept : The game involves a host, who builds augmented blocks at preferred locations. Players on the resolving side then spread out in search of these augmented blocks. To help them, maps with markers and guide images are provided to ease navigation. The three key interfaces that make up the game are :

• Build & Host interface: where the host builds augmented objects in the space of their interest.

• Map interface: where the player gets hints and help to navigate to the augmented object.

• Explore interface: where the player can explore various places of possible interventions.
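The map markers depend on knowing which hosted objects lie close to the player's current position. The following is a minimal sketch of such a proximity filter, assuming each hosted object is stored with a latitude and longitude next to its anchor ID. The `HostedObject` record, the `nearby_objects` function, and the 200 m radius are illustrative assumptions and are not taken from the project's actual backend.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


@dataclass
class HostedObject:
    # Hypothetical record; the project's real storage schema is not described.
    anchor_id: str
    lat: float  # degrees
    lon: float  # degrees


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearby_objects(player_lat, player_lon, objects, radius_m=200.0):
    """Return (distance_m, object) pairs within radius_m, nearest first."""
    hits = []
    for obj in objects:
        d = haversine_m(player_lat, player_lon, obj.lat, obj.lon)
        if d <= radius_m:
            hits.append((d, obj))
    hits.sort(key=lambda pair: pair[0])
    return hits


if __name__ == "__main__":
    # Made-up coordinates in central London, for illustration only.
    hosted = [
        HostedObject("anchor-a", 51.5250, -0.1340),
        HostedObject("anchor-b", 51.5346, -0.1340),
        HostedObject("anchor-c", 51.5246, -0.1350),
    ]
    for dist, obj in nearby_objects(51.5246, -0.1340, hosted):
        print(f"{obj.anchor_id}: {dist:.0f} m away")
```

In a deployed game this filtering would more plausibly run on the server side, so that only nearby records are sent to the device; the sketch only shows the distance logic.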

Credits

Sathish, Zhenan, Yan
